Final Project Play Testing: Haenyeo

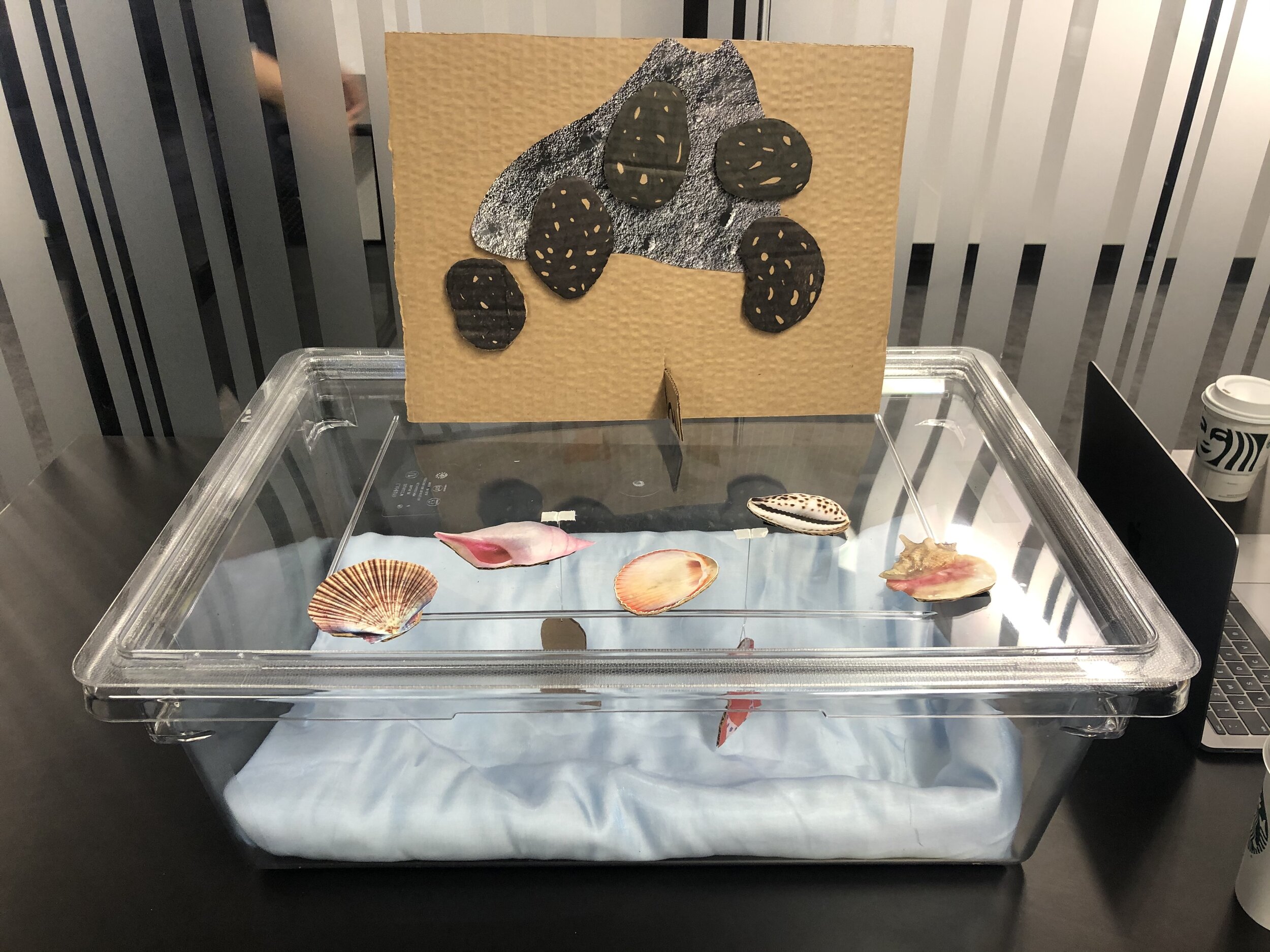

During our play test, we gathered valuable feedback from our classmates. Using some of the props we had already purchased along with cardboard models, we primarily tested whether users would intuitively bring the shells and rocks to their ears to trigger sound snippets. We also tested different types of sound snippets, ranging from ambient ocean-wave noise to narrated information about haenyeo and Jeju Island. The image above shows our setup for the play test.

Here are some key takeaways from our testing:

- The loud and hectic environment made it difficult to hear sounds from the computer speakers
  - Listening directly to small speakers embedded in the objects would make the interaction more obvious and make it easier to focus on the sound
- The interaction with the rock is not obvious: users would not expect it to play sound
  - Possibly add instructions to the sound tracks played by the shells, which are more intuitive
- A linear story does not work well with the setup
  - Sounds as “clues” or individual stories?
- More interaction
  - Triggering lights with touch on the volcano model
  - Moving the haenyeo models with motors?
  - More types of objects to tell the story? (buoy, net, goggles)
  - Slowing down the waves while a sound is playing so the user can pay attention to it

Based on these results, we adjusted our project to focus on the story we want to tell. We came up with two parallel stories, one of a haenyeo living on Jeju Island and one of a housewife living in Seoul, to highlight the matriarchal role of haenyeo against the strongly defined gender roles in South Korea. We decided to tell the stories in non-linear snippets through various relevant objects with mini speakers embedded in them.

When we started testing the electronics for playing multiple sound snippets from an SD card through small speakers on an Arduino, we quickly ran into problems with sound quality and track management. Because our project now revolved around narrated stories instead of ambient sounds, the tracks had to be clearly audible for the information to come across. Consequently, we decided to use headphones instead of small speakers.

To mimic the action of “listening” to the objects while wearing headphones, we came up with the idea of using RFID chips and readers to trigger the sounds. We would mount the RFID reader onto the side of the headphones, so that the user has to bring an object with an attached RFID chip up to the side of the headphones to hear the corresponding story. This setup also turned out to be much more reliable, since we could play the sound files directly from a computer with Processing code.
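The post does not include the Processing code, so the following is only a minimal sketch of how the computer side might map RFID reads to stories. It is written in plain Java (Processing sketches compile to Java) and assumes that the Arduino prints one line per tag read in the form `UID: 04 A2 1B 3C`. The tag IDs, file names, and the `StoryDispatcher` class are all hypothetical. The call that actually starts audio, for example a `SoundFile` from Processing's Sound library, is left out so the example stays self-contained.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

// Hypothetical dispatcher: turns serial lines from the RFID reader into "play this file" decisions.
class StoryDispatcher {
    // Hypothetical mapping from tag UID (uppercase hex, single spaces) to a story sound file.
    private final Map<String, String> tracks = new HashMap<>();
    // UID of the tag whose story is currently playing, or null if nothing is playing.
    private String currentUid = null;

    StoryDispatcher() {
        tracks.put("04 A2 1B 3C", "haenyeo_shell.wav");
        tracks.put("04 7F 9E 10", "seoul_apron.wav");
    }

    // Normalizes an assumed serial line like "uid: 04 a2 1b 3c\r" to "04 A2 1B 3C".
    static String normalize(String line) {
        String s = line.trim().toUpperCase(Locale.ROOT);
        if (s.startsWith("UID:")) {
            s = s.substring(4).trim();
        }
        // Collapse any run of whitespace into a single space.
        return s.replaceAll("\\s+", " ");
    }

    // Returns the file to start, or empty if the tag is unknown
    // or the same tag is still being held against the reader.
    Optional<String> onSerialLine(String line) {
        String uid = normalize(line);
        String file = tracks.get(uid);
        if (file == null || uid.equals(currentUid)) {
            return Optional.empty();
        }
        currentUid = uid;
        return Optional.of(file);
    }

    // Call when the current track finishes, so the same object can be replayed.
    void onTrackFinished() {
        currentUid = null;
    }

    public static void main(String[] args) {
        StoryDispatcher d = new StoryDispatcher();
        String[] lines = {"UID: 04 a2 1b 3c", "UID: 04 A2 1B 3C", "UID: 04 7F 9E 10", "UID: FF FF FF FF"};
        for (String line : lines) {
            System.out.println(line + " -> " + d.onSerialLine(line).orElse("(no change)"));
        }
    }
}
```

Ignoring repeated reads of the same tag is a design choice made under an assumption. Depending on the Arduino code, a reader may report a tag over and over while it is held in place, and without this check the story would restart on every read.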